Tech Topic | Hearing Review September 2014

By Francis Kuk, PhD, and Petri Korhonen, MS

An introduction to localization, factors that influence localization when wearing hearing aids, and what steps can be taken to improve localization ability for hearing aid users.

Localization is the ability to tell the direction of a sound source in a 3-D space. The ability to localize sounds provides a more natural and comfortable listening experience. It is also important for safety reasons such as to avoid oncoming traffic, an approaching cyclist on a running path, or a falling object. Being able to localize also allows the listener to turn toward the sound source and use the additional visual cues to enhance communication in adverse listening conditions.

Hearing loss results in a reduction of localization ability1; however, the use of amplification may not always restore localization to a normal level. Fortunately, the application of digital signal processing and wireless transmission techniques in hearing aids has helped preserve the cues for localization.

How Do We Localize?

A sound source has different temporal, spectral, and intensity characteristics depending on its location in 3-D space. A listener uses these characteristics (known as cues) for sounds arriving from all directions on the horizontal and vertical planes to identify the origin of the sound.

Horizontal plane. Localization on the horizontal plane involves comparing the same sound as received at the two ears (ie, binaural comparison for left/right) or as shaped by the front and back surfaces of the same ear (ie, front/back).

- Left/right: The head casts an acoustic shadow on sounds that originate from one side, so the same sound arrives at the opposite ear at a lower level (interaural level difference, or ILD) and, because it must travel farther around the head, later in time (interaural time difference, or ITD). For sounds below 1500 Hz, whose wavelengths are greater than the dimensions of the head, the head casts little shadow, but the sound still takes a measurable time to travel from one side of the head to the other; this ITD is the primary cue for localizing sounds below 1500 Hz. Above 1500 Hz, where the timing cue becomes less useful and the head shadow becomes more pronounced, the intensity difference between the ears, or ILD, is the primary cue for localization.2

- Front/back: The pinna casts a shadow on sounds that are presented from behind the listener. Indeed, the amplitude of sounds above 2000 Hz that originate from the back of the listener is about 2-3 dB lower than sounds that originate from the front as measured at the ear canal entrance. This spectral difference is accepted as an additional cue to aid in elevation judgments and front/back discrimination.2
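The size of the ITD cue described above can be estimated with Woodworth's spherical-head approximation, ITD = (r/c)(θ + sin θ), where r is the head radius, c the speed of sound, and θ the azimuth from straight ahead. The sketch below assumes a head radius of 8.75 cm and c = 343 m/s (typical textbook values, not figures from this article) and also compares wavelengths at a few frequencies with the head diameter.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # assumed: air at roughly 20 °C
HEAD_RADIUS_M = 0.0875      # assumed: typical adult head radius


def woodworth_itd_s(azimuth_deg: float,
                    head_radius_m: float = HEAD_RADIUS_M,
                    c: float = SPEED_OF_SOUND_M_S) -> float:
    """ITD in seconds for a distant source (Woodworth spherical-head model).

    Azimuth is measured from straight ahead (0°) toward one side (90°);
    the formula is intended for 0-90°.
    """
    theta = math.radians(azimuth_deg)
    return (head_radius_m / c) * (theta + math.sin(theta))


def wavelength_m(freq_hz: float, c: float = SPEED_OF_SOUND_M_S) -> float:
    """Acoustic wavelength in metres."""
    return c / freq_hz


for az in (0, 30, 60, 90):
    print(f"azimuth {az:2d}°: ITD about {woodworth_itd_s(az) * 1e6:.0f} µs")

head_diameter_m = 2 * HEAD_RADIUS_M
for f in (500, 1500, 4000):
    print(f"{f} Hz: wavelength {wavelength_m(f):.3f} m, head diameter {head_diameter_m:.3f} m")
```

Under these assumptions the ITD grows from 0 µs straight ahead to roughly 650 µs at 90°, and the wavelength at 1500 Hz is still larger than the head, while at 4000 Hz it is well below the head diameter.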

Vertical plane. Byrne and Noble1 demonstrated that sounds striking the pinna from different elevations undergo changes in spectrum, especially above 4000 Hz. This change in spectral content may provide a cue to the elevation of a sound source.

Monaural versus binaural cues. Because localization typically involves the use of two ears, people with hearing in only one ear (either aided monaurally or with single-sided deafness) would be expected to have extreme difficulty with localization tasks. However, some people in this category still report some ability to localize. Frequently, such a person moves the head or body to estimate the direction of a sound. The overall loudness of the sound and the spectral change of the sound as it diffracts around the pinna from different orientations act as cues. This spectral cue relies on a stored image of the sound, so cognition is involved in the use of monaural cues.3

Is Localization a Problem?

Physiological structures in the brainstem related to localization. The superior olivary complex (SOC) in the pons is the most caudal (lowest) structure to receive binaural inputs. This suggests that the lower brainstem is critical to binaural interaction.

Any lesion that affects input to the SOC could disrupt the cues for localization. Because cognitive factors (including memory, expectations, and experience) also affect localization, localization performance may not be explained by a lesion in just one structure, but rather by the involvement of many structures within the brain. This also makes it difficult to predict localization performance from the audiogram or any other single set of information.4

Prevalence and ramifications. One conclusion from almost every study is that hearing-impaired people have poorer localization ability than normal-hearing people. Amplification may improve localization in that it provides the needed audibility. However, aided localization is commonly found to be poorer than unaided localization when tested at the wearer’s most comfortable listening level (ie, when audibility is not a factor).

In general, left/right discrimination is only mildly impaired in hearing-impaired listeners, whereas front/back discrimination is consistently impaired,1 to the point that aided front/back performance can be poorer than unaided performance.5

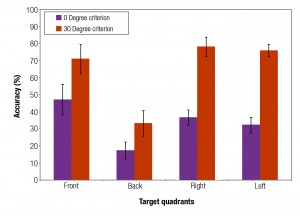

These observations were replicated in our internal study at the ORCA-USA facility. Figure 1 shows the localization accuracy for sounds presented from 12 azimuths in the unaided condition at 30 dB SL (sensation level) in 15 experienced hearing aid wearers. For ease of presentation, the results across the 12 loudspeakers were collapsed into front, back, left, and right quadrant scores. Results are reported for two scoring criteria: the “0 degree” criterion requires exact identification of the target loudspeaker, whereas the “30 degree” criterion also accepts responses within ±1 loudspeaker (ie, ±30°) of the target.
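The two scoring criteria can be made concrete with a small scoring routine. With 12 evenly spaced loudspeakers the spacing is 30°, so the “30 degree” criterion accepts any response whose angular error is at most 30°. The trial data below are hypothetical, and the mean absolute error is included only as a related metric, not one reported in Figure 1.

```python
SPEAKER_SPACING_DEG = 360 / 12  # 12 loudspeakers evenly spaced: 30°


def angular_error_deg(target_deg: float, response_deg: float) -> float:
    """Smallest absolute angle between two azimuths (0-180°)."""
    diff = abs(target_deg - response_deg) % 360
    return min(diff, 360 - diff)


def percent_correct(trials, criterion_deg: float) -> float:
    """Percentage of (target, response) pairs whose error is within criterion_deg."""
    hits = sum(1 for t, r in trials if angular_error_deg(t, r) <= criterion_deg)
    return 100.0 * hits / len(trials)


def mean_error_deg(trials) -> float:
    """Mean absolute angular error across trials, in degrees."""
    return sum(angular_error_deg(t, r) for t, r in trials) / len(trials)


# Hypothetical (target, response) azimuths: 0° = front, 90° = right.
trials = [(0, 0), (0, 330), (90, 120), (180, 0), (270, 270)]
print(percent_correct(trials, 0))                    # "0 degree" criterion: 40.0
print(percent_correct(trials, SPEAKER_SPACING_DEG))  # "30 degree" criterion: 80.0
print(mean_error_deg(trials))                        # 48.0
```

Taking the error modulo 360° matters: a response at 330° to a target at 0° is one loudspeaker away, not eleven.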

There are two clear observations. First, localization accuracy for sounds is impaired in this subject population. Even with the 30 degree criterion, subjects were only 60-70% accurate in localizing sounds from the front, left, and right. With a 0 degree criterion, it is only between 30% and 45%. Second, localization of sounds from the back was extremely poor. A score of 30% was seen with the 30 degree criterion; the percentage dropped to 20% when a 0 degree criterion was used.

Several authors have suggested that being able to focus one’s attention on a sound source in an adverse listening environment (eg, a cocktail party) may help improve speech understanding and communication.6,7 Furthermore, Bellis4 indicated that similar brainstem processing mechanisms are used for speech-in-noise and localization tasks. Studies of cochlear implant users8 have demonstrated a significant correlation between localization ability and speech recognition in bimodal (one cochlear implant and one hearing aid) and bilateral cochlear implant subjects. If this can be generalized to other hearing-impaired patients, it would suggest that an improvement in localization could result in improved speech understanding in noise or better communication in adverse listening conditions. Conversely, it is unclear whether improving speech-in-noise performance would lead to improved localization performance. Neither of these hypotheses has yet been validated.

Factors Affecting Aided Localization Ability

![Kuk_localization figure 1]()

Figure 1. Unaided localization accuracy of experienced hearing aid wearers (N=15) for sounds originating from 4 quadrants at a 30 dB SL input level and scored using two criteria (0 degree and 30 degree).

Despite the use of amplification, aided localization is still poorer than that of normal-hearing listeners.9 In some cases, aided localization ability may be even poorer than unaided localization when tested at the same sensation level.

This has two important implications. First, audibility is not the only factor that governs localization ability; otherwise, ensuring audibility by testing at the same sensation level would restore localization to a normal level for all wearers. Additional means to restore localization are therefore needed. Second, the manner in which amplification is applied could affect aided localization performance, as shown by aided performance that is poorer than unaided performance.

From the device manufacturer’s perspective, it is important to understand the reasons for the poorer performance, and design better algorithms so the aided performance can be as “normal” as possible. The following are some reasons for the poorer aided performance.

1) Hearing aids cannot overcome the effects of a distorted auditory system. Hearing loss is not simply a problem of sound attenuation or loss of sensitivity; it is also a problem of sound distortion. While a properly fit hearing aid may overcome the loss of hearing sensitivity and improve localization because audibility is restored, the effect of the distortion still lingers. Such distortion may affect the integrity of the aided signal reaching the brain, rendering the effective use of the available cues impossible or suboptimal.

As the degree of hearing loss increases, the extent of the distortion will likely increase.1 However, it is not possible to predict the degree of distortion/difficulties based only on the degree of hearing loss.

2) The wearers have developed compensatory strategies. Hearing loss deprives the brain of the necessary acoustic inputs. This would lead the listeners to develop alternative compensatory strategies that do not require the “correct cues.” They may use other sensory modalities (eg, head and body movement, visual searching) to help them localize. Thus, the newly amplified signals may not be “recognizable” to the brain. This would lead to continued localization errors unless the brain is retrained to interpret the new input.

For example, Hausler et al10 reported degraded localization in people with conductive hearing loss and found that the abnormality persisted for some time after hearing thresholds were returned to normal through surgery. The restored cues may become useful only once the listener has learned a new strategy for using them.

3) Loss of natural cues, including:

- Binaural cues. Normal localization depends on cues provided by both ears, not just one. A person who is aided monaurally despite hearing loss in both ears would have significantly more localization difficulty than if he or she were aided in both ears. For example, Kobler and Rosenhall11 noted a bilateral hearing aid advantage over unilateral hearing aid use for localization. This suggests that bilateral amplification will likely yield better localization than monaural amplification. However, bilateral amplification is not guaranteed to improve on unaided localization ability, even when audibility is ensured.

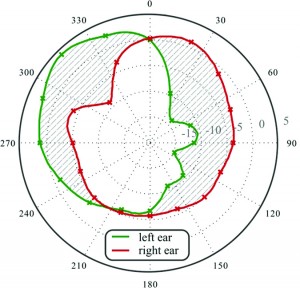

- Interaural cues from signal processing. It was mentioned previously that the normal ears utilize the interaural time difference (ITD) and the interaural level difference (ILD) cues to localize sounds presented to the sides. However, these natural interaural differences could be altered with the use of a wide dynamic range compression (WDRC) hearing aid. In the case where a sound originates from one side (say, right), the same sound would appear at a much lower intensity level at the opposite ear (left), brought about by the head-shadow effect. This natural difference in intensity level signals the location of the sound (Figure 2).

![Kuk_localization figure 2]()

Figure 2. Inter-aural level difference in the unaided condition.

When a pair of hearing aids that provide linear processing is worn, the same gain is applied to both ears, which preserves the interaural level difference. A WDRC hearing aid provides less gain to the louder sound and more gain to the softer sound (at the opposite ear). This reduces the interaural level difference between ears and could affect the accuracy of localizing sounds to the sides of the listener. For example, Figure 3 shows that the interaural level difference may decrease from 15 dB to 10 dB with fast-acting WDRC hearing aids.

![Kuk_localization figure 3]()

Figure 3. Inter-aural level difference in a fast acting WDRC hearing aid.
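A static input/output rule is enough to see why compression shrinks the ILD: when the inputs at both ears lie above the compression knee, the aided ILD is the unaided ILD divided by the compression ratio. The sketch below assumes a single channel, identical settings at both ears, and hypothetical gain, knee, and ratio values. A ratio of 1.5 is used only because it reproduces the 15 dB to 10 dB example; it is not the setting behind Figure 3, and real fast-acting WDRC also has time constants and multiple channels.

```python
def wdrc_output_db(input_db: float, gain_db: float = 20.0,
                   knee_db: float = 50.0, ratio: float = 1.5) -> float:
    """Steady-state output level of a simple single-channel WDRC rule.

    Constant gain below the knee; above it, each `ratio` dB of input
    change produces 1 dB of output change.
    """
    if input_db <= knee_db:
        return input_db + gain_db
    return knee_db + gain_db + (input_db - knee_db) / ratio


def aided_ild_db(near_ear_db: float, far_ear_db: float, **rule) -> float:
    """ILD after each ear's hearing aid applies the same rule independently."""
    return wdrc_output_db(near_ear_db, **rule) - wdrc_output_db(far_ear_db, **rule)


# Hypothetical input levels: 75 dB at the near ear, 60 dB at the far ear (15 dB ILD).
print(aided_ild_db(75.0, 60.0, ratio=1.0))  # linear processing: 15.0
print(aided_ild_db(75.0, 60.0, ratio=1.5))  # compression: about 10 dB
```

Below the knee both ears get the same gain, so a 15 dB difference between two soft inputs would pass through unchanged; the reduction appears only once the inputs enter the compression region.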

The components of a hearing aid (including the DSP chip) could introduce delay to the processed signal. The impact of the delay is minimal if the extent of the delay is identical between ears. However, if the delay is different between the two ears, the time of arrival (or phase) will be different, and that may result in a disruption of the localization cues.
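The effect of unequal delays follows from simple arithmetic: each device shifts the arrival time at its own ear, so any difference between the two delays adds directly to the natural ITD. The delay values below are hypothetical, and the sketch ignores sound that reaches the eardrum directly through a vent.

```python
def aided_itd_us(natural_itd_us: float, delay_left_us: float, delay_right_us: float) -> float:
    """ITD after each hearing aid adds its own processing delay.

    Sign convention: ITD = arrival time at right ear - arrival time at left ear,
    so positive values mean the sound reaches the left ear first.
    """
    return natural_itd_us + (delay_right_us - delay_left_us)


# Hypothetical source on the left: natural ITD of +300 µs.
print(aided_itd_us(300.0, 4000.0, 4000.0))  # matched delays, cue unchanged: 300.0
print(aided_itd_us(300.0, 4500.0, 4000.0))  # 0.5 ms mismatch, cue now points right: -200.0
```

Because natural ITDs are on the order of a few hundred microseconds, a mismatch of similar size is enough to shift or even reverse the timing cue, while identical delays leave it untouched.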

Drennan et al12 compared the ability of hearing-impaired listeners to localize and to identify speech in noise using phase-preserving and non-phase-preserving amplification. After 16 weeks of use, speech understanding in noise and localization were slightly better with phase-preserving processing than with non-phase-preserving processing.

- Pinna cues. As mentioned previously, the pinna casts a shadow on sounds, especially high-frequency sounds that originate from behind. This changes the spectrum of the sounds at the entrance of the ear canal, and this spectral change is used as a cue to distinguish between front and back.

Hearing aids that eliminate this pinna cue could cause front/back localization problems. Because the microphones of a BTE/RIC typically sit above the ear and are not shadowed by the pinna, wearers of BTE/RICs are more likely to be deprived of this cue. Because the microphones of custom products (CIC/ITE/ITC) are at the entrance of the ear canal and are shadowed by the pinna, wearers of these styles are likely to retain the pinna cues. Indeed, Best et al13 compared the localization performance of 11 listeners with BTE and CIC fittings and found fewer front/back reversal errors with the CIC than with the BTE. The loss of pinna cues could affect a significant number of hearing aid wearers because over 70% of hearing aids sold in the United States are BTE/RIC styles.14
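Front/back reversal errors can be scored by reflecting the target azimuth about the interaural axis and checking whether the response lands on the mirror image instead of the target. The sketch below is a generic scoring rule, not the procedure used by Best et al; the 15° tolerance is a hypothetical choice.

```python
def angular_error_deg(a_deg: float, b_deg: float) -> float:
    """Smallest absolute angle between two azimuths (0-180°)."""
    diff = abs(a_deg - b_deg) % 360
    return min(diff, 360 - diff)


def mirror_front_back(azimuth_deg: float) -> float:
    """Reflect an azimuth about the interaural axis (0° = front, 90° = right)."""
    return (180 - azimuth_deg) % 360


def is_front_back_reversal(target_deg: float, response_deg: float,
                           tolerance_deg: float = 15.0) -> bool:
    """True if the response matches the target's mirror image but not the target.

    Targets at 90° or 270° are their own mirror images, so they can never
    be scored as reversals.
    """
    near_mirror = angular_error_deg(mirror_front_back(target_deg), response_deg) <= tolerance_deg
    near_target = angular_error_deg(target_deg, response_deg) <= tolerance_deg
    return near_mirror and not near_target


print(mirror_front_back(30))             # front-right 30° mirrors to 150
print(is_front_back_reversal(30, 150))   # True
print(is_front_back_reversal(330, 210))  # True: front-left heard as back-left
print(is_front_back_reversal(90, 90))    # False
```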

- Spectral cues. The use of occluding hearing aids reduces the magnitude of high frequency sounds that could signal a change in elevation of the input signal. Furthermore, even when the microphone picks up the high frequency sounds, the localization cues may still not be audible if the output bandwidth of the hearing aid is limited.

So, What Can Be Done to Improve Localization?

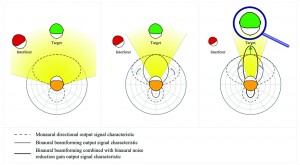

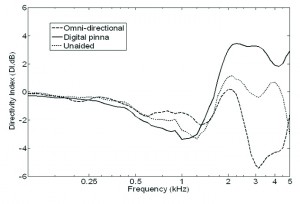

![Kuk_localization figure 4]()

Figure 4. In-situ directivity index (DI) of an omnidirectional microphone on a BTE, of the natural ear with pinna, and of the pinna compensation algorithm microphone system used in the Dream.

One must provide the necessary acoustic cues so the impaired auditory system has as much accurate information to process as possible. Appropriate training may be necessary to retrain the altered brain to interpret the available information.

1) Bilateral hearing aid fitting. The first and most important requirement for receiving binaural cues is to wear hearing aids on both ears. In addition, the two hearing aids should be closely matched, because mismatched devices can create unnecessary differences in processing delay between the ears.

2) Wireless hearing aids with inter-ear connectivity. One way to preserve the ILD is to use wireless technology that allows the two hearing aids of a bilateral pair to communicate. Recently, near-field magnetic induction (NFMI) technology has been applied in hearing aids so that the compression settings of both hearing aids in a bilateral pair are shared.15 In the Widex Dream hearing aids, a 10.6 MHz carrier frequency is used to transmit information between the two hearing aids 21 times per second (ie, less than 50 ms between transmissions). When a sound is identified as coming from one side of the listener, the gain on the opposite ear is adjusted to preserve the ILD. This feature is called inter-ear compression (IE).

Korhonen et al16 studied the impact of inter-ear compression on the ability of 10 trained hearing-impaired subjects to localize sounds. The comparison was made with a BTE using an omnidirectional microphone. The use of IE reduced the localization error for sounds from the sides by 7.3°.
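The text does not describe exactly how the shared compression settings are combined. One simple scheme, assumed here purely for illustration and not necessarily how Widex inter-ear compression works, is to compute each ear's WDRC gain independently and then apply the smaller of the two to both ears, which lowers the far-ear gain to match the near ear. The sketch also converts the stated 21 transmissions per second into an update interval; the gain, knee, and ratio values are hypothetical.

```python
TRANSMISSIONS_PER_S = 21  # from the text


def wdrc_gain_db(input_db: float, gain_db: float = 20.0,
                 knee_db: float = 50.0, ratio: float = 1.5) -> float:
    """Gain (dB) that a simple static WDRC rule gives one ear."""
    if input_db <= knee_db:
        return gain_db
    return gain_db - (input_db - knee_db) * (1 - 1 / ratio)


def linked_gains_db(left_db: float, right_db: float) -> tuple:
    """Hypothetical linking rule: apply the smaller of the two gains to both ears."""
    shared = min(wdrc_gain_db(left_db), wdrc_gain_db(right_db))
    return shared, shared


def aided_ild_db(left_db: float, right_db: float, gains: tuple) -> float:
    """Right-minus-left output level difference after applying (left, right) gains."""
    gain_left, gain_right = gains
    return (right_db + gain_right) - (left_db + gain_left)


left, right = 60.0, 75.0  # hypothetical: source on the right, 15 dB unaided ILD
independent = (wdrc_gain_db(left), wdrc_gain_db(right))
print(f"update interval: {1000 / TRANSMISSIONS_PER_S:.1f} ms")  # 47.6 ms
print("independent:", aided_ild_db(left, right, independent))   # about 10 dB
print("linked:", aided_ild_db(left, right, linked_gains_db(left, right)))  # about 15 dB
```

With independent compression the 15 dB difference shrinks to about 10 dB; with the shared gain it stays at about 15 dB. A practical system would also need to smooth the shared gain over time, since it can be updated only as often as the ears exchange data.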

3) Restoring the pinna cues. As pointed out earlier, pinna cues are preserved in custom products but could be compromised in BTE/RIC hearing aids that use an omnidirectional microphone. Thus, pinna cues need to be restored only in BTE/RIC products.

Figure 4 shows the in-situ directivity index (DI) of the pinna (unaided) and that of an omnidirectional BTE microphone. The DI of the pinna alone is about 2 dB between 2 and 4 kHz. For the omnidirectional microphone, the DI drops to as low as -5 dB at 3000 Hz. This means that, at 3000 Hz, the microphone is up to 5 dB less sensitive to sounds from the front than to sounds averaged over all directions, so a 3000 Hz sound from behind could be picked up louder than the same sound from the front! To restore the directivity of the pinna, one can spatially filter sounds that come from behind so that the microphone system has the same or better DI than the pinna (ie, 2 dB or more from 2000 to 4000 Hz).
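The directivity index compares a microphone's sensitivity to sound from straight ahead with its average sensitivity to sound from all directions: 0 dB means no preference for the front, and negative values mean the front is picked up less than the average direction. The sketch below computes the free-field DI of ideal first-order patterns a + (1 - a) cos θ, using the closed form obtained by integrating the squared pattern over the sphere. It is an idealization for illustration only; it does not model the head or pinna, so it will not reproduce the in-situ values in Figure 4.

```python
import math


def directivity_index_db(a: float) -> float:
    """Free-field DI (dB) of the ideal first-order pattern a + (1 - a) cos(theta).

    The directivity factor is Q = 4*pi*p(0)^2 / (integral of p^2 over the sphere).
    With p(0) = 1, integrating over the sphere gives Q = 1 / (a^2 + (1 - a)^2 / 3).
    """
    q = 1.0 / (a ** 2 + (1 - a) ** 2 / 3)
    return 10 * math.log10(q)


def q_from_di_db(di_db: float) -> float:
    """Frontal power relative to the direction-averaged power for a given DI."""
    return 10 ** (di_db / 10)


for name, a in (("omni", 1.0), ("cardioid", 0.5), ("hypercardioid", 0.25)):
    print(f"{name:13s} DI = {directivity_index_db(a):.1f} dB")  # 0.0, 4.8, 6.0

# A DI of -5 dB means frontal power is only about 32% of the average over all directions.
print(round(q_from_di_db(-5.0), 3))  # 0.316
```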

In the Dream hearing aid, the Digital Pinna feature has a DI of around 4 dB from 2000 to 4000 Hz to restore the pinna shadow. Kuk et al17 reported that the digital pinna algorithm was able to improve front/back localization from 30% to 60% over an omnidirectional microphone condition.

By the same logic, one would expect a directional microphone on a BTE, in addition to improving the SNR, to also improve front/back localization compared with its omnidirectional counterpart. Chung et al18 compared localization with an omnidirectional microphone and a directional microphone in 8 normal-hearing listeners and 8 hearing-impaired listeners using an ITE-style hearing aid. For both groups, front/back localization performance was better with the directional microphone than with the omnidirectional microphone.

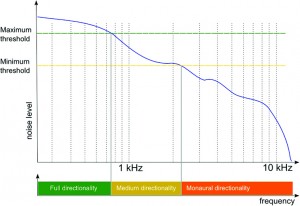

Keidser et al9 examined the localization ability of 21 hearing-impaired listeners in four conditions: unaided, and aided in omnidirectional, partially directional [omni in lower frequencies and hypercardioid in higher frequencies to compensate for pinna shadow], and fully directional [hypercardioid in all channels] modes. For stimuli with sufficient mid- and high-frequency content, the fully directional mode improved front/back localization but had a detrimental effect on left/right localization. The partially directional mode did not have a negative effect on left/right localization and also improved front/back localization. This study demonstrated that a partially directional microphone in a BTE can provide helpful cues for localization.
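The idea behind the partially directional mode can be sketched per frequency band: low bands stay omnidirectional, while high bands use a hypercardioid that attenuates sound from behind, much as the pinna does. The sketch assumes ideal first-order patterns in free field and a hypothetical 2000 Hz crossover; the crossover used by Keidser et al is not given here.

```python
import math

CROSSOVER_HZ = 2000.0   # hypothetical boundary between omni and directional bands
HYPERCARDIOID_A = 0.25  # ideal first-order hypercardioid: 0.25 + 0.75 cos(theta)


def pattern_a(band_hz: float, mode: str) -> float:
    """First-order pattern coefficient for one band (1.0 = omnidirectional)."""
    if mode == "omni":
        return 1.0
    if mode == "full":
        return HYPERCARDIOID_A
    if mode == "partial":
        return 1.0 if band_hz < CROSSOVER_HZ else HYPERCARDIOID_A
    raise ValueError(f"unknown mode: {mode}")


def back_re_front_db(a: float) -> float:
    """Level of a sound from 180° relative to the same sound from 0°, in dB.

    For a + (1 - a) cos(theta) the front sensitivity is 1 and the back
    sensitivity is |2a - 1|; undefined for a cardioid (a = 0.5), whose rear
    null is infinitely deep.
    """
    return 20 * math.log10(abs(2 * a - 1))


bands_hz = (500, 1000, 4000)
for mode in ("omni", "partial", "full"):
    row = [round(back_re_front_db(pattern_a(f, mode)), 1) for f in bands_hz]
    print(mode, dict(zip(bands_hz, row)))
# omni    {500: 0.0, 1000: 0.0, 4000: 0.0}
# partial {500: 0.0, 1000: 0.0, 4000: -6.0}
# full    {500: -6.0, 1000: -6.0, 4000: -6.0}
```

In this idealization, the partial mode adds a front/back level difference only in the high band, which is where the pinna would normally provide one, and leaves the low bands as they would be with an omnidirectional microphone.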

Van den Bogaert et al19 also showed that ITC and ITP (in-the-pinna) hearing aids have less front/back confusion than BTEs. However, BTEs with a directional microphone were able to preserve some front/back localization.

4) Use of slow-acting compression. One can preserve the natural interaural level difference by using linear processing in both hearing aids, since equal gain at both ears leaves the intensity difference between the ears intact. However, the disadvantage of a linear hearing aid is that soft sounds may not be audible, while loud sounds may be too loud or may saturate the output of the hearing aid.

An alternative is to use slow-acting WDRC (SA-WDRC). This preserves the short-term envelope of the input signal (ie, processing is effectively linear over short time spans) while the hearing aids adjust their gain according to the long-term input level. This preserves the ILD and other interaural cues. SA-WDRC also has the advantage of a more natural sound quality.20 It is noteworthy that Widex has been using SA-WDRC since it first introduced the Senso digital hearing aid in 1996.
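Whether compression is fast or slow is set by the attack and release time constants of the level detector that drives the gain. The sketch below uses a generic one-pole attack/release detector feeding a static compression rule; all time constants, the knee, and the ratio are hypothetical illustration values, not the settings of any Widex product.

```python
import math


def smoothing_coeff(time_constant_s: float, frame_rate_hz: float) -> float:
    """One-pole smoothing coefficient for a given time constant."""
    return math.exp(-1.0 / (time_constant_s * frame_rate_hz))


def track_level_db(levels_db, frame_rate_hz, attack_s, release_s):
    """Level estimate that rises with the attack and falls with the release time constant."""
    a_att = smoothing_coeff(attack_s, frame_rate_hz)
    a_rel = smoothing_coeff(release_s, frame_rate_hz)
    estimate = levels_db[0]
    out = []
    for x in levels_db:
        coeff = a_att if x > estimate else a_rel
        estimate = coeff * estimate + (1 - coeff) * x
        out.append(estimate)
    return out


def gain_db(level_db, gain=20.0, knee=45.0, ratio=2.0):
    """Static WDRC rule applied to the tracked level."""
    return gain - max(0.0, level_db - knee) * (1 - 1 / ratio)


FRAME_RATE_HZ = 1000  # one level value per millisecond
# Hypothetical input: 50 dB background, a 30 ms burst at 70 dB, then background again.
levels = [50.0] * 500 + [70.0] * 30 + [50.0] * 500

for name, attack_s, release_s in (("fast", 0.005, 0.05), ("slow", 0.5, 2.0)):
    tracked = track_level_db(levels, FRAME_RATE_HZ, attack_s, release_s)
    gain_change = gain_db(50.0) - min(gain_db(v) for v in tracked)
    print(f"{name}: largest gain reduction during burst = {gain_change:.1f} dB")
```

With the fast settings the gain drops by about 10 dB within the 30 ms burst, so the burst is compressed; with the slow settings the gain moves by only about 0.6 dB and the burst passes through almost linearly, keeping its short-term level, and therefore any level difference between the ears, largely intact. In this sketch, a sound lasting much longer than the slow time constants would still drive the gain to its compressed value.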

5) Broad bandwidth and open fit. The use of open fitting allows the natural diffraction of sounds within the concha area and preserves any cues that are used for vertical localization.21 The use of hearing aids with a broad bandwidth (eg, Dream 4-m-CB or Dream Clearband that yields in-situ output up to 10 kHz) provides audibility of high frequency sounds that may help localization.

Unfortunately, an open-fit hearing aid also limits the maximum amount of high frequency gain to less than 40 dB, even with the use of active feedback cancellation. Thus, the use of an open-fit broad bandwidth hearing aid is only possible for individuals with up to a moderate degree of hearing loss in the high frequencies (around 60-70 dB HL at 4000 Hz).21,22

Toward Restoring Localization

In summary, bilateral wireless hearing aids of the custom style (CIC, ITE/ITC) that exchange compression parameters between ears, fitted with a large vent and a broad bandwidth, should provide the best potential to preserve localization cues. A directional microphone may further enhance front/back localization. If BTE/RICs are desired, broadband bilateral wireless devices with inter-ear features and a pinna compensation algorithm, fitted in an open mode (or with as much venting as possible), are appropriate. A directional microphone can also add to their effectiveness.

What else can be done to improve localization? Even with optimal acoustic cues, the brain may be accustomed to the old pattern of neural activity, so the new cues may decrease performance. What is needed are rehabilitation programs that teach these wearers how to interpret and use the new cues.

Wright and Zhang23 provided a review of the literature that suggests localization can be learned or relearned. The reviewed data indicated human adults can recalibrate, as well as refine, the use of sound-localization cues. Training regimens can be developed to enhance sound-localization performance in individuals with impaired localization abilities. This will be further discussed in a subsequent paper on the topic.

References

1. Byrne D, Noble W. Optimizing sound localization with hearing aids. Trends Ampl. 1998;3(2):51-73.

2. Blauert J. Spatial Hearing: The Psychophysics of Human Sound Localization. Cambridge, Mass: The MIT Press; 1997.

3. Durlach N. Binaural signal detection: equalization and cancelation theory. In: Tobias JV, ed. Foundations of Modern Auditory Theory. New York: Academic Press; 1972:369-462.

4. Bellis T. Assessment and Management of Central Auditory Processing Disorders in the Educational Setting: From Science to Practice. Independence, Ky: Thomson Delmar Learning; 2003.

5. Vaillancourt V, Laroche C, Giguere C, Beaulieu M, Legault J. Evaluation of auditory functions for Royal Canadian Mounted Police officers. J Am Acad Audiol. 2011;22(6):313-331.

6. Arbogast T, Mason C, Kidd G. The effect of spatial separation on informational masking of speech in normal-hearing and hearing-impaired listeners. J Acoust Soc Am. 2005;117(4):2169-2180.

7. Best V, Marrone N, Mason C, Kidd G, Shinn-Cunningham B. Effects of sensorineural hearing loss on visually guided attention in a multitalker environment. J Assoc Res Otolaryngol. 2009;10(1):142-149.

8. Litovsky R, Parkinson A, Arcaroli J. Spatial hearing and speech intelligibility in bilateral cochlear implant users. Ear Hear. 2009;30(4):419-431.

9. Keidser G, O’Brien A, Hain J, McLelland M, Yeend I. The effect of frequency-dependent microphone directionality on horizontal localization performance in hearing-aid users. Int J Audiol. 2009;48(11):789-803.

10. Hausler R, Colburn H, Marr E. Sound localization in subjects with impaired hearing. Acta Oto-Laryngol. 1983;[suppl 400]. Monograph.

11. Kobler S, Rosenhall U. Horizontal localization and speech intelligibility with bilateral and unilateral hearing aid amplification. Int J Audiol. 2002;41(7):395-400.

12. Drennan W, Gatehouse S, Howell P, Van Tasell D, Lund S. Localization and speech-identification ability of hearing impaired listeners using phase-preserving amplification. Ear Hear. 2005;26(5):461-472.

13. Best V, Kalluri S, McLachlan S, Valentine S, Edwards B, Carlile S. A comparison of CIC and BTE hearing aids for three-dimensional localization of speech. Int J Audiol. 2010;49(10):723-732.

14. Strom KE. Hearing aid sales up 2.9% in ’12. Hearing Review. 2013;20(2):6.

15. Kuk F, Crose B, Kyhn T, Mørkebjerg M, Rank M, Nørgaard M, Föh H. Digital wireless hearing aids III: audiological benefits. Hearing Review. 2011;18(8):48-56.

16. Korhonen P, Lau C, Kuk F, Keenan D, Schumacher J. Effects of coordinated dynamic range compression and pinna compensation algorithm on horizontal localization performance in hearing-aid users. J Am Acad Audiol. In press.

17. Kuk F, Korhonen P, Lau C, Keenan D, Norgaard M. Evaluation of a pinna compensation algorithm for sound localization and speech perception in noise. Am J Audiol. 2013;22(6):84-93.

18. Chung K, Neuman E, Higgins M. Effects of in-the-ear microphone directionality on sound direction identification. J Acoust Soc Am. 2008;123(4):2264-2275.

19. Van den Bogaert T, Carette E, Wouters J. Sound source localization using hearing aids with microphones placed behind-the-ear, in-the-canal, and in-the-pinna. Int J Audiol. 2011;50(3):164-176.

20. Hansen M. Effects of multi-channel compression time constants on subjectively perceived sound quality and speech intelligibility. Ear Hear. 2002;23(4):369-380.

21. Byrne D, Sinclair S, Noble W. Open earmold fittings for improving aided auditory localization for sensorineural hearing losses with good high-frequency hearing. Ear Hear. 1998;19(1):62-71.

22. Kuk F, Baeksgaard L. Considerations in fitting hearing aids with extended bandwidth. Hearing Review. 2009;16(10):32-39.

23. Wright B, Zhang Y. A review of learning with normal and altered sound-localization cues in human adults. Int J Audiol. 2006;45[Suppl 1]:S92-98.

Original citation for this article: Kuk F, Korhonen P. Localization 101: Hearing aid factors in localization. Hearing Review. 2014;21(9):26-33.

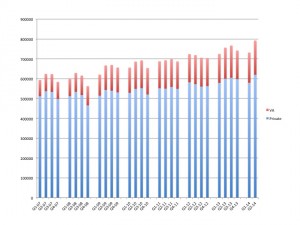

![[Click on figures to enlarge.] Figure 1. binax Narrow Directionality: Binaural processing system composed of three components: Binaural beamforming, binaural noise reduction gain, and a head movement compensation module.](http://cdn.hearingreview.com/hearingr/2014/10/Fig1_binax_jpeg_conv-300x201.jpeg)